Tuesday, July 4, 2023

Friday, March 24, 2023

ORACLE GOLDENGATE

This post gives an overview of Oracle GoldenGate, software for real-time data integration and replication across heterogeneous IT systems, and describes its components.

Oracle GoldenGate is a must-know for DBAs and consists of the following components:

- Manager

- Extract

- Data Pump

- Collector

- Replicat

- Trail Files

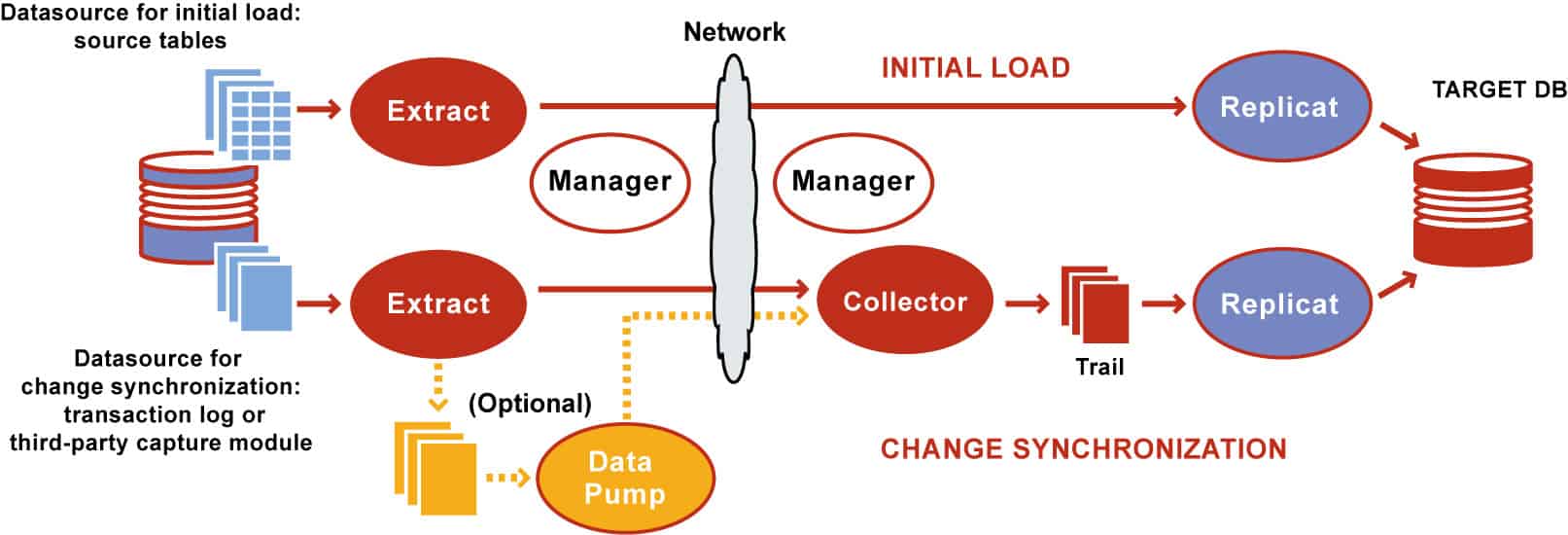

1. Extract

The Oracle GoldenGate Extract process resides on the source system and captures committed transactions from the source database. The database logs may contain both committed and uncommitted data, but Extract captures only committed transactions and writes them to local trail files.
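The "committed transactions only" behaviour can be illustrated with a small toy model. This is only a sketch: the `(txn_id, op, payload)` record layout and the `capture_committed` function are hypothetical and do not reflect GoldenGate's actual log or trail formats.

```python
from collections import defaultdict

def capture_committed(log_records):
    """Yield the operations of each transaction only once its COMMIT is seen.

    log_records: iterable of (txn_id, op, payload) tuples, where op is
    "INSERT"/"UPDATE"/"DELETE", "COMMIT", or "ROLLBACK". This layout is
    hypothetical and is not GoldenGate's real format.
    """
    pending = defaultdict(list)  # open transactions, keyed by txn_id
    for txn_id, op, payload in log_records:
        if op == "COMMIT":
            # Emit all buffered operations in their original order.
            yield from pending.pop(txn_id, [])
        elif op == "ROLLBACK":
            # Discard the operations of a rolled-back transaction.
            pending.pop(txn_id, None)
        else:
            pending[txn_id].append((txn_id, op, payload))

log = [
    (1, "INSERT", {"id": 10}),
    (2, "INSERT", {"id": 20}),
    (2, "ROLLBACK", None),
    (1, "UPDATE", {"id": 10, "name": "a"}),
    (1, "COMMIT", None),
    (3, "DELETE", {"id": 30}),  # never committed, so never emitted
]
trail = list(capture_committed(log))
```

Here only the two operations of transaction 1 end up in `trail`; the rolled-back transaction 2 and the still-open transaction 3 are left out.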

The extract can be configured for any of the following purposes:

- Initial Load: For the initial load method of replication, Extract captures a static set of data directly from the source tables or objects.

- Change Synchronization: In this method of replication, the Extract process continuously captures changes (DML and DDL) from the source database to keep the source and target databases synchronized. It is the only method for implementing continuous replication between the source and target databases.

The data source of the Extract process can be one of the following:

- Source table (if the extract is configured for initial load)

- The database transaction logs or recovery logs (such as Oracle redo logs, Oracle archive logs, SQL audit trails, or Sybase transaction logs), depending on the type of source database.

- A third-party capture module can also be used to extract transactional data from the source database. In this method, the data and metadata from an external API are passed to the Extract API.

Extract captures changes from the source database based on the Extract configuration, which specifies the objects to be replicated from the source database.

Multiple Extract processes can be configured on a source database to operate on the same or different source objects.

After extracting the records from the source database objects, Extract performs one of the following tasks:

- Delivers the extracted data to the trail files on the target server through the Collector process

- Writes the extracted data to the local trail files on the source system

Optionally, Extract can also be configured to perform data filtering, transformation, and mapping while capturing data or before transferring it to the target system.

2. Data Pump

The Data Pump is an optional GoldenGate server process on the source system. It comes into play when the extracted data is not transferred directly to the target trail files. In a Data Pump setup, the Extract process captures records from the source and stores them in local trail files on the source file system. The Data Pump then reads the records from the local trail files and delivers them to the trail files on the target system through the Collector.

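The Data Pump is also known as a secondary Extract process. Including a Data Pump in a GoldenGate configuration is generally recommended, because the primary Extract can keep writing to the local trail even when the network or the target system is unavailable.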
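The division of work between the primary Extract and the Data Pump can be sketched with a toy model. The `DataPump` class, its `checkpoint` index, and the in-memory lists standing in for trail files are all hypothetical names for illustration, not GoldenGate internals.

```python
class DataPump:
    """Toy secondary extract: forwards local-trail records to a remote trail."""

    def __init__(self, local_trail, remote_trail):
        self.local_trail = local_trail    # list written by the primary Extract
        self.remote_trail = remote_trail  # list standing in for the target trail
        self.checkpoint = 0               # index of the next record to send

    def pump(self, network_up=True):
        """Send every unsent record; return how many were delivered."""
        if not network_up:
            return 0  # records simply stay in the local trail
        unsent = self.local_trail[self.checkpoint:]
        self.remote_trail.extend(unsent)
        self.checkpoint += len(unsent)
        return len(unsent)

local_trail, remote_trail = [], []
pump = DataPump(local_trail, remote_trail)

local_trail.append("txn-1")                     # Extract writes locally
sent_while_down = pump.pump(network_up=False)   # nothing can be delivered
local_trail.append("txn-2")                     # Extract keeps capturing
sent_after_recovery = pump.pump(network_up=True)
```

In this sketch the capture side never blocks on the network: both records accumulate locally during the simulated outage and are delivered together once the pump can reach the target again.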

3. Collector

The collector is a server process that runs in the background on the target system in a GoldenGate replication setup where the extract is configured for continuous Change Synchronization.

The Collector performs the following roles in GoldenGate replication:

- When the source Extract sends a connection request, the Collector process on the target system scans for and binds to an available port, then sends the port number back to the Manager for assignment to the requesting Extract process.

- The Collector receives the data sent by the source Extract process and writes it to trail files on the target system.

There is one Collector process on the target system for each Extract process on the source system; that is, Extract and Collector processes map one to one.
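The port assignment and the one-to-one Extract/Collector relationship described above can be mimicked with a few lines of bookkeeping. This is a rough sketch only: `assign_collector_port`, the Extract names, and the port range 7810 to 7812 are made-up values, and no real sockets are opened.

```python
def assign_collector_port(extract_name, port_range, assignments):
    """Give each Extract its own port, modelling one Collector per Extract.

    assignments maps extract name -> port and is updated in place.
    """
    if extract_name in assignments:
        return assignments[extract_name]  # this Extract already has a Collector
    in_use = set(assignments.values())
    for port in port_range:               # scan for the first free port
        if port not in in_use:
            assignments[extract_name] = port
            return port
    raise RuntimeError("no free port in the configured range")

assignments = {}
ports = range(7810, 7813)  # hypothetical range of ports available on the target
port_a = assign_collector_port("ext_a", ports, assignments)
port_b = assign_collector_port("ext_b", ports, assignments)
port_a_again = assign_collector_port("ext_a", ports, assignments)
```

Each Extract name ends up with exactly one port, and asking again for the same Extract returns the port it already holds.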

4. Replicat

The Replicat process runs on the target system and is primarily responsible for replicating the extracted data delivered to the target trail files by the source extract process.

The Replicat process reads the trail files on the target system, reconstructs the DDL and DML operations from them, and applies those operations to the target database.

Replicat has two types of configuration, which correspond to the type of Extract configured on the source system:

- Initial loads: In an initial load configuration, Replicat applies the static data copy extracted by the initial-load Extract to the target objects, or routes it to a high-speed bulk-load utility.

- Change synchronization: In change synchronization configuration, Replicat applies the continuous stream of data extracted from the source objects to the target objects using a native database interface or ODBC drivers, depending on the type of the target database.

Optionally, Replicat can also be configured to perform data filtering, transformation, and mapping before applying the transactions to the target database.
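To make filtering, transformation, and mapping concrete, here is a minimal sketch of the three steps applied to trail records before they reach the target. The `replicate` function, the dictionary record shape, and the table names `hr.emp` and `dw.employees` are hypothetical; in GoldenGate itself these rules are declared in the process configuration rather than written as code like this.

```python
def replicate(trail_records, table_map, row_filter, transform):
    """Return the operations a toy Replicat would apply to the target."""
    applied = []
    for rec in trail_records:
        if rec["table"] not in table_map:
            continue                           # table is not mapped: skip it
        if not row_filter(rec["row"]):
            continue                           # filtering
        applied.append({
            "table": table_map[rec["table"]],  # mapping: source -> target name
            "op": rec["op"],
            "row": transform(rec["row"]),      # transformation
        })
    return applied

trail_records = [
    {"table": "hr.emp", "op": "INSERT", "row": {"id": 1, "name": "ann", "dept": 10}},
    {"table": "hr.emp", "op": "INSERT", "row": {"id": 2, "name": "bob", "dept": 20}},
    {"table": "hr.audit", "op": "INSERT", "row": {"id": 9}},
]
applied = replicate(
    trail_records,
    table_map={"hr.emp": "dw.employees"},
    row_filter=lambda row: row["dept"] == 10,
    transform=lambda row: {**row, "name": row["name"].upper()},
)
```

Only the first record survives: the second is filtered out by department, and the third belongs to a table that is not mapped.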

5. Trail or Extract Files

Trails, or Extract files, are the operating system files that GoldenGate uses to store the records captured from the source objects by the Extract process. Trail files can be created on the source system and on the target system, depending on the GoldenGate replication setup. Trail files on the source system are called Extract trails or local trails, and those on the target system are called remote trails.

Trail files store data in a format that does not depend on the source or target database, which is why GoldenGate can replicate between different platforms.

Using trails minimizes the load on the source database: once the Extract process has read the transaction logs (online redo logs or archive logs) and written the changes to trail files, operations such as filtering, conversion, and mapping happen outside the source database. Trail files also make the extraction and replication processes independent of each other.
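The way a file on disk decouples the writing side from the reading side can be shown with a small sketch. It assumes a JSON-lines file and a byte-offset checkpoint, both of which are illustrative choices; GoldenGate's real trail format and checkpoint mechanism are different.

```python
import json
import os
import tempfile

def append_records(path, records):
    """Writer side (stands in for Extract): append records as JSON lines."""
    with open(path, "a", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")

def read_from(path, offset):
    """Reader side (stands in for Replicat): read records past a byte offset.

    Returns the records and the new offset to store as the checkpoint.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    records = [json.loads(line) for line in data.splitlines() if line]
    return records, offset + len(data)

trail_path = os.path.join(tempfile.mkdtemp(), "trail.jsonl")

append_records(trail_path, [{"op": "INSERT", "id": 1}])
first_batch, checkpoint = read_from(trail_path, 0)

# The writer can keep appending without knowing where the reader is.
append_records(trail_path, [{"op": "UPDATE", "id": 1}, {"op": "DELETE", "id": 1}])
second_batch, checkpoint = read_from(trail_path, checkpoint)
```

The writer and reader share nothing but the file: the reader resumes from its own checkpoint, so either side can stop and restart without the other noticing.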

6. Manager

Manager can be considered the parent process in a GoldenGate replication setup on both the source and target systems. It controls, manages, and maintains the other GoldenGate processes and files. The Manager process is responsible for the following tasks:

- Starting up Oracle GoldenGate processes

- Maintaining port numbers for processes

- Starting up dynamic processes

- Performing GoldenGate trail management

- Creating event, error, and threshold reports